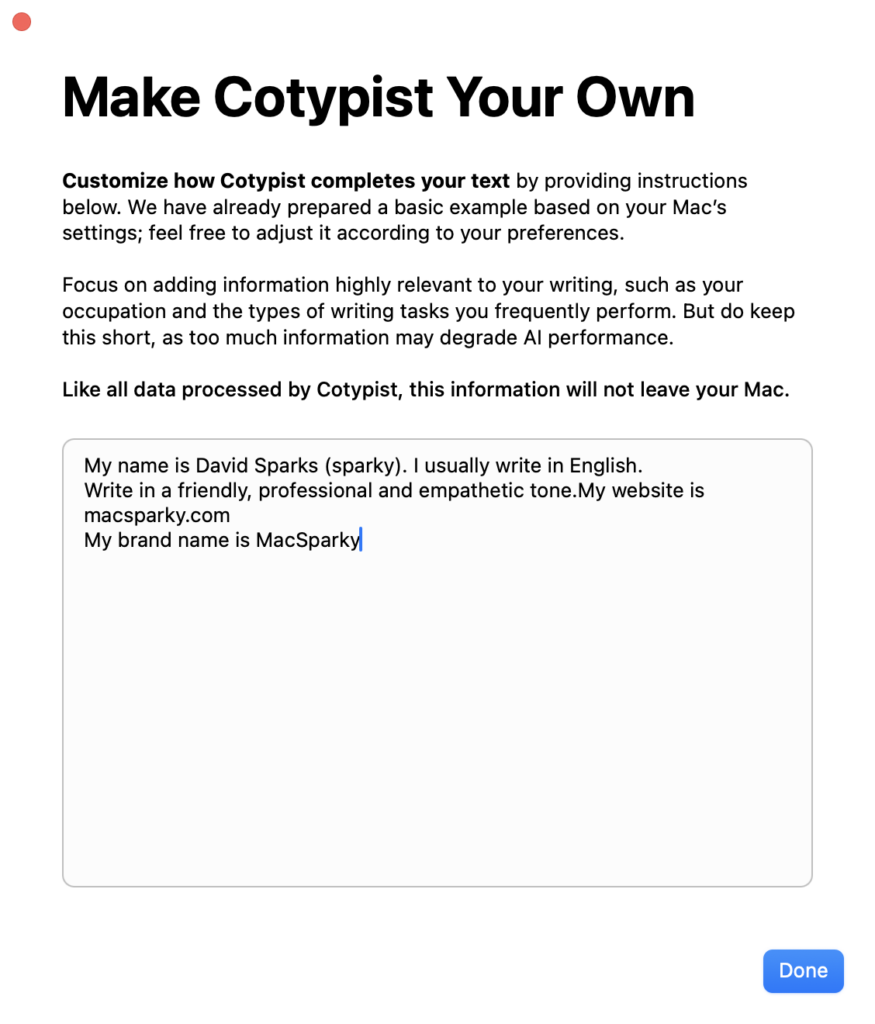

There are a lot of angles to AI and productivity emerging right now. One I’ve come to appreciate is smarter, AI-based autocomplete. My tool of choice for this is Cotypist. It’s made by a trusted Mac developer, it’s fast, and it takes privacy seriously.

Unlike many AI writing tools that require you to work within their specific interface, Cotypist works in virtually any text field across your Mac. Whether you’re drafting an email, writing in your favorite text editor, or filling out a form, Cotypist is there to help speed up your writing.

The app’s latest version (0.7.2) brings notable improvements to both performance and completion quality. It even respects your Mac’s Smart Quotes preferences – a small but meaningful touch that shows attention to detail.

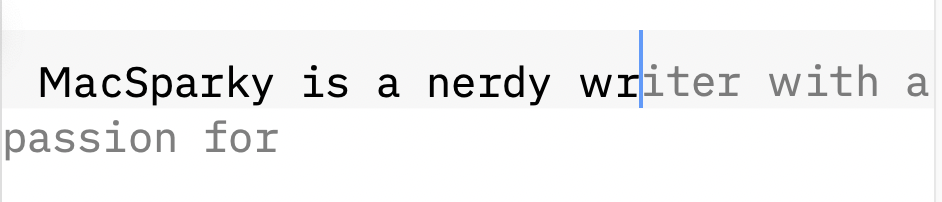

With Cotypist turned on, inline completions appear in real time as you type. From there, you’ve got a few options:

- You could just ignore the suggestion and keep typing like you’ve always done.

- If you want to accept the full multi-word suggestion, you press a user-defined key. (I use the backtick – just above the Tab key on a US keyboard.)

- If you just want to accept the next suggested word, you hit another user-defined key. (I use Tab.)

- If you want to dismiss the suggestion entirely, press Escape. (This is handy when filling out online forms, for instance.)

At first, the constant suggestions felt distracting, but now that I’ve adapted to them, I can’t imagine going back.

Cotypist generates all completions locally on your Mac. No cloud services, no data sharing – just your Mac’s processing power working to speed up your writing.

Like I said, Cotypist is an interesting take on AI and productivity, and it’s worth checking out.