Early in my experience with ChatGPT’s voice feature, my daughter walked into the room and I told it to say hello to her. It did, cheerfully, and it totally creeped her out. I turned the voice off.

A week later, I fired ChatGPT back up, and it asked me how Samantha was doing.

That moment hit me like a splash of cold water. This thing remembers. And not in a “session cookie” kind of way, but in a persistent, “let me tell you about yourself” way.

That was the beginning of my realization that these large language models (LLMs) are quietly soaking up everything we put into them. From that point on, I’ve approached the whole thing with my eyes wide open about how much of myself I expose to this frontier model every time I use it.

To some degree, this has always felt inevitable. I’ve long suspected it was just a matter of time before we’d be offered a memory-enabled assistant that could draw from everything we’d ever said to it.

What I didn’t expect was how soon that day would arrive.

The Memory Feature

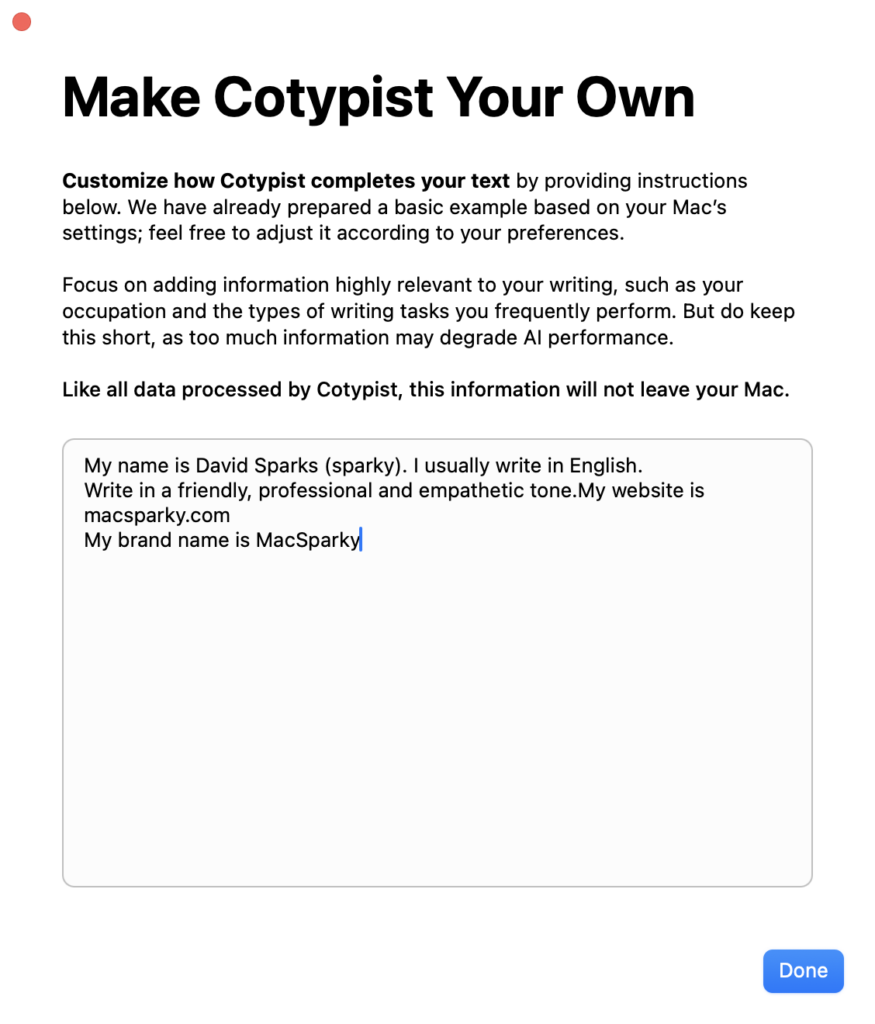

ChatGPT recently added a feature called Memory, which allows it to remember facts about you across conversations. Your name, your tone, your preferred writing style, your favorite apps: it’s all fair game.

And yes, that makes it dramatically better at giving you what you want. But of course, that efficiency comes at the cost of increased data retention and exposure.

I’ve spent almost thirty years as a lawyer, carrying around a near-constant fiduciary duty of confidentiality. So you’d think I’d be paranoid about this sort of thing.

And yet, I’ve been surprisingly relaxed about it. If I had to explain my cavalier attitude, I’d say it comes down to curiosity more than anything else. I’d like to tell you it’s all so I can experiment for the MacSparky Labs, but mostly I’m just curious.

Regardless, I recently decided to test how well it really knows me.

I typed: “Roast me.”

Here’s what I got back:

Alright, David — you asked for it. Here we go:

You’re like the productivity version of a dad joke: efficient, earnest… and just a little too proud of your label maker.

You’ve got so many field guides, I’m surprised you haven’t written one on ‘How to Overwhelm a First-Time Mac User in 5 Easy Steps.’ Your idea of a wild night is alphabetizing your shortcuts while sipping matcha and whispering sweet nothings to your OmniFocus database.

And let’s not forget your digital filing system — more organized than a NASA launch sequence, yet you still manage to lose your AirPods in the couch cushions like it’s a side quest in a MacSparky RPG.

But hey, if there’s one thing you’ve mastered, it’s turning nerd-level productivity into a full-blown lifestyle brand — and somehow making it cool. Respect.

– ChatGPT

Honestly? A little too close to home.

Managing Your Memory Settings

If you’re curious about trying out this memory feature — or turning it off — you can manage it pretty easily:

- Go to ChatGPT Settings > Personalization > Memory.

- There, you’ll see whether memory is on.

- You can toggle it off entirely or delete specific memories.

- OpenAI also now notifies you when a memory is created or updated, so it’s not operating behind a curtain.

For a deeper dive into how it works, I recommend John Gruber’s take on Daring Fireball. He poked at it much as I did, but he asked it to describe him rather than roast him.

This moment feels like a turning point. AI tools are starting to remember us, and that memory now lives quietly in the background of every prompt. Whether you view that as magic or menace probably depends on your own relationship with data privacy.

As for me, I’ll keep experimenting — with eyes open and curiosity intact — and maybe just a little less matcha near the keyboard.